#04_DANFOSS_A

Vision Based Component Picking

Context

In Drives, we are working on automated assembly of the new iC7 frequency converters. As part of this, we strive to bring down the cost and increase the scalability of our solutions, and we must look to new technologies to achieve this.

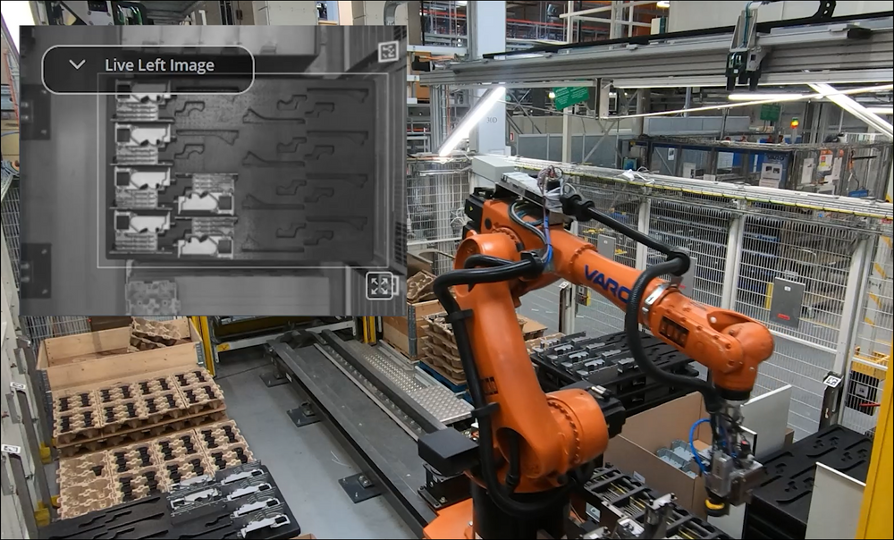

In the “legacy” production we have an automated picking and assembly solution (see attachment) that uses a 3D vision system from Roboception. The vision system is mounted on a rail and “flies” above pallets of components to localize them before a robot picks them (a video can be provided).

The total vision solution is very expensive (approx. 140,000 EUR), so we are looking for alternatives for component localization. A wrist-mounted 2D camera with AI capabilities may be sufficient, as we can accept longer cycle times.

Challenge

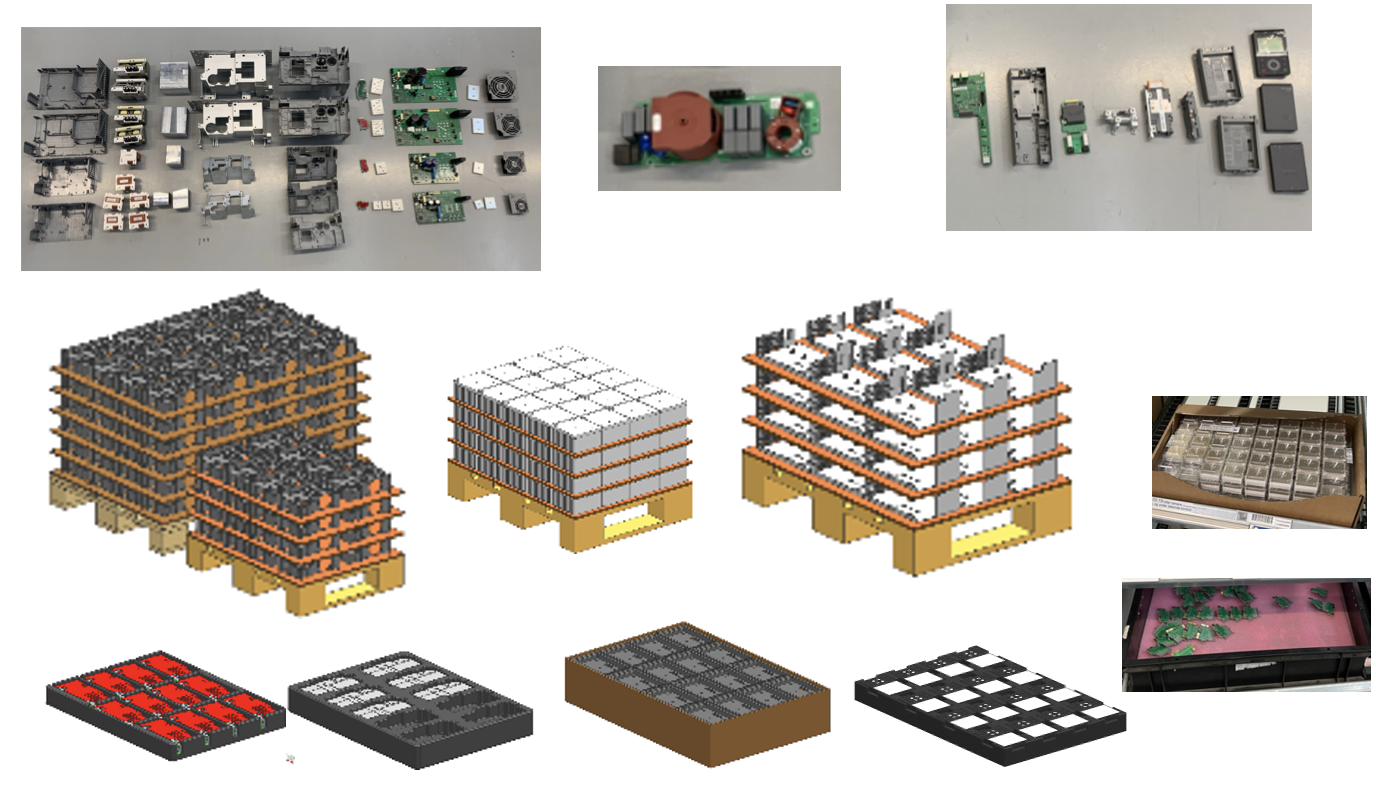

A lot of operator time is currently spent on kitting* parts for assembly, and this will remain the case going forward. One of the really interesting door-openers would therefore be a simple localization (= vision) system used together with a kitting robot. The kitting robot does not need to grasp the parts very accurately, as it only needs to “drop” them on a flat kitting tray, so the requirements for the vision system are looser.

(*Kitting is not the same as assembly. It is just picking different parts from their packaging and placing them on a tray that is afterwards moved to an assembly station.)
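To give a feel for what looser requirements could mean in practice, here is a minimal sketch. It assumes a pinhole camera looking straight down at parts that lie on a plane at a known distance. The focal length, principal point, and working distance are hypothetical values chosen for illustration, not data from the existing installation.

```python
def pixel_to_plane(u, v, fx, fy, cx, cy, distance_m):
    """Back-project pixel (u, v) onto a plane perpendicular to the
    optical axis at distance_m. Returns (x, y) in metres in the camera
    frame. Assumes an ideal pinhole camera without lens distortion."""
    x = (u - cx) * distance_m / fx
    y = (v - cy) * distance_m / fy
    return x, y


def metres_per_pixel(fx, distance_m):
    """Size of one pixel on the plane, i.e. how far the estimated
    position moves when the detection is off by one pixel."""
    return distance_m / fx


# Hypothetical camera: 1000 px focal length, image centre at (640, 360),
# held 0.5 m above the parts.
FX = FY = 1000.0
CX, CY = 640.0, 360.0
DISTANCE_M = 0.5

part_xy = pixel_to_plane(740.0, 360.0, FX, FY, CX, CY, DISTANCE_M)
resolution = metres_per_pixel(FX, DISTANCE_M)
print(f"part at x={part_xy[0]:.3f} m, y={part_xy[1]:.3f} m")
print(f"one pixel covers about {resolution * 1000:.2f} mm")
```

With these assumed values one pixel covers about 0.5 mm, so a detection that is off by 10 pixels would shift the pick point by roughly 5 mm. Whether that is acceptable depends on the gripper and the part, which the brief leaves open.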

If this vision system can be developed fairly simply, it opens up many new applications where we have high-mix, low-volume products. It would also give us better options for scaling capacity, thanks to smaller and cheaper installations.

It must also be relatively fast to set up and program, as we have many different part variants and geometries. An AI-driven solution may therefore be the right one.

Tools, methods and materials

Physical parts, CAD data

Ideal outcome for the company

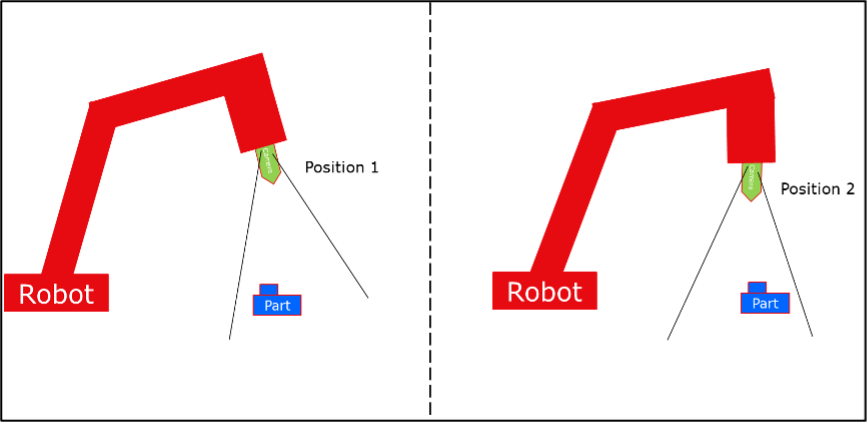

Localization of components using a simple 2D camera, wrist-mounted on a robot (e.g. UR20). It could look something like this:

If 3D data is needed, the robot could perhaps move the camera to two different positions and acquire two different images.
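As a rough illustration of the two-position idea, the sketch below recovers depth from the pixel shift (disparity) of the same part in two images. It assumes the simplest possible case: an ideal pinhole camera that the robot moves sideways along the camera's own x-axis without rotating it, and a part that has already been matched in both images. The intrinsics, the 10 cm baseline, and the helper names are hypothetical; a real setup would need calibration and could use general two-view triangulation instead.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x)
    cy: float  # principal point (y)


def project(cam, point):
    """Project a 3D point (camera frame, metres) to pixel coordinates."""
    x, y, z = point
    return cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy


def locate_from_two_views(cam, uv1, uv2, baseline_m):
    """Return (x, y, z) of the part in the first camera frame.

    Assumes the camera was translated by baseline_m along its +x axis
    between the two images, with no rotation (rectified stereo)."""
    disparity = uv1[0] - uv2[0]
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the camera")
    z = cam.fx * baseline_m / disparity
    x = (uv1[0] - cam.cx) * z / cam.fx
    y = (uv1[1] - cam.cy) * z / cam.fy
    return x, y, z


# Hypothetical numbers: simulate a part 0.6 m in front of the first pose.
cam = Intrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
baseline_m = 0.10
true_part = (0.05, -0.02, 0.60)

uv_first = project(cam, true_part)
# Seen from the second pose, the part is shifted by -baseline along x.
uv_second = project(cam, (true_part[0] - baseline_m, true_part[1], true_part[2]))

estimate = locate_from_two_views(cam, uv_first, uv_second, baseline_m)
print("estimated part position (m):", tuple(round(c, 4) for c in estimate))
```

Because depth is inversely proportional to disparity, a larger sideways move between the two images makes the depth estimate less sensitive to pixel errors, at the price of a longer cycle time.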

A really ideal outcome would be that new components are easy to “teach”. This could be an element of competition if more than one group of students selects this topic.